(The code in this project is based on the Fast Campus deep learning course.)

<Previous post>

https://silvercoding.tistory.com/4

[MNIST Project] 2. Preprocessing and visualizing the MNIST dataset

Adding noise

https://www.tensorflow.org/tutorials/images/data_augmentation

Data augmentation | TensorFlow Core

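First, data augmentation is a technique that increases the diversity of the training set by applying random transformations.

To a person, a flower is recognizably the same flower whether the photo is rotated or zoomed in, but to a computer each version arrives as a different input. Random transformations like these are therefore applied to diversify the training set.

This post uses MNIST data with this kind of noise added.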

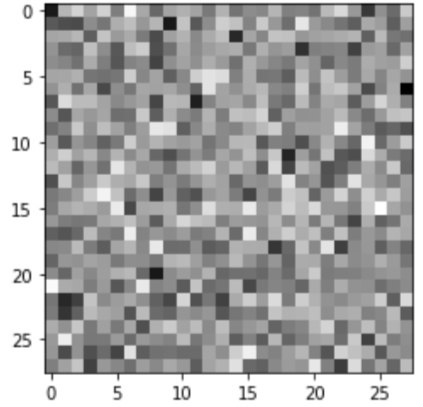

(1) Generating random noise of size (28, 28)

- np.random.random

print(np.random.random((2, 2)))

np.random.random() returns floats in the range [0, 1). Passing a shape in the parentheses, as above, returns random values in that shape, here (2, 2).

np.random.random((28,28)).shape

So this call produces random noise of size (28, 28).

Passing that array to plt.imshow() shows a noise picture like the one above.

It is darker than the picture above, though. This is too strong to use as noise.

- np.random.normal

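print(np.random.normal(0.0, 0.1, (28, 28)))

So np.random.normal is used instead, which takes a mean and a standard deviation. Here the mean is 0 and the standard deviation is 0.1.

Plotting this array shows a reasonable amount of noise!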
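The difference between the two generators can also be checked with numbers instead of by eye. Here is a minimal sketch, assuming NumPy's `default_rng` generator with an arbitrary fixed seed; the variable names are illustrative and not part of the original code.

```python
import numpy as np

# Seeded generator so the numbers are reproducible (the seed is arbitrary).
rng = np.random.default_rng(0)

# Uniform noise in [0, 1): same distribution as np.random.random((28, 28)).
uniform_noise = rng.random((28, 28))

# Gaussian noise with mean 0.0 and standard deviation 0.1,
# same distribution as np.random.normal(0.0, 0.1, (28, 28)).
gaussian_noise = rng.normal(0.0, 0.1, (28, 28))

for name, noise in [("uniform", uniform_noise), ("gaussian", gaussian_noise)]:
    print(f"{name:8s} mean={noise.mean():.3f} std={noise.std():.3f} "
          f"min={noise.min():.3f} max={noise.max():.3f}")
```

The uniform noise averages around 0.5 with a standard deviation near 0.29, while the Gaussian noise stays close to 0, which is why it looks much fainter when plotted.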

(2) Applying it to a single image

Let's add noise to the image at index 777.

noisy_image = train_images[777] + np.random.normal(0.5, 0.1, (28, 28))

The mean is set to 0.5 to make the difference easier to see.

Plotting it shows the noise, but some values now exceed 1.

noisy_image[noisy_image > 1.0] = 1.0

So this line replaces every value above 1.0 with 1.0, which gives a noisy image whose values lie between 0 and 1.
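The boolean-mask assignment only caps the upper end. As an alternative (not the approach used in this post), `np.clip` bounds both ends in a single call. A minimal sketch, where `image` is a random stand-in for `train_images[777]`:

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for train_images[777]: a (28, 28) array scaled to [0, 1].
image = rng.random((28, 28))

# Same noise parameters as in the post: mean 0.5, standard deviation 0.1.
noisy_image = image + rng.normal(0.5, 0.1, (28, 28))

# Clip to [0, 1]: values above 1 become 1 and values below 0 become 0.
clipped = np.clip(noisy_image, 0.0, 1.0)

print(noisy_image.max() > 1.0)       # whether any value exceeds 1 before clipping
print(clipped.min(), clipped.max())  # range after clipping
```

With a mean of 0.5 and a standard deviation of 0.1, negative values are very unlikely, so both approaches give practically the same result here.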

(3) Applying noise to all images

train_noisy_images = train_images + np.random.normal(0.5, 0.1, train_images.shape)

train_noisy_images[train_noisy_images > 1.0] = 1.0

test_noisy_images = test_images + np.random.normal(0.5, 0.1, test_images.shape)

test_noisy_images[test_noisy_images > 1.0] = 1.0

This is the final code, which applies noise to both the train and test images.

Plotting the first 5 images with the multi-image visualization from the previous post shows that they come out as expected.
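The train and test lines repeat the same two steps, so they could be wrapped in a small helper. This is only a sketch: `add_gaussian_noise` is a hypothetical name, and the batch below is a random stand-in rather than the real MNIST arrays.

```python
import numpy as np


def add_gaussian_noise(images, mean=0.5, std=0.1, rng=None):
    """Return a noisy copy of `images`, capped at 1.0 like the code above."""
    rng = np.random.default_rng() if rng is None else rng
    # Draw one noise value per pixel for every image in the batch.
    noisy = images + rng.normal(mean, std, images.shape)
    # Cap values above 1.0, mirroring `x[x > 1.0] = 1.0`.
    return np.minimum(noisy, 1.0)


# Stand-in batch of 8 images in [0, 1] instead of the real MNIST arrays.
fake_images = np.random.default_rng(0).random((8, 28, 28))
fake_noisy = add_gaussian_noise(fake_images, rng=np.random.default_rng(1))
print(fake_noisy.shape, fake_noisy.max())
```

With the real data this would be called as `add_gaussian_noise(train_images)` and `add_gaussian_noise(test_images)`; the input arrays are left unmodified.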

Finally,

Building the model

(1) Preparing for modeling - one-hot encoding the labels: (batch size,) -> (batch size, number of classes)

The labels, which have shapes (60000,) and (10000,), are one-hot encoded into shapes (60000, 10) and (10000, 10).

from keras.utils import to_categorical

train_labels = to_categorical(train_labels, 10)

test_labels = to_categorical(test_labels, 10)

to_categorical is imported from keras.utils and called as to_categorical(labels to encode, number of classes).
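To see what one-hot encoding does to the shape, the same transformation can be reproduced in plain NumPy. This is a sketch of the idea with a few example labels, not how `to_categorical` is implemented internally.

```python
import numpy as np

labels = np.array([5, 0, 4, 1, 9])   # example integer labels, shape (5,)
num_classes = 10

# Row i of the identity matrix is the one-hot vector for class i,
# so indexing with the labels picks one row per label.
one_hot = np.eye(num_classes, dtype="float32")[labels]

print(one_hot.shape)   # (5, 10)
print(one_hot[0])      # 1.0 at index 5, 0.0 elsewhere
```

Taking `one_hot.argmax(axis=1)` recovers the original integer labels.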

(2) Creating a SimpleRNN classification model

from keras.layers import SimpleRNN

from keras.layers import Dense, Input

from keras.models import Model

inputs = Input(shape=(28, 28))

x1 = SimpleRNN(64, activation="tanh")(inputs)

x2 = Dense(10, activation="softmax")(x1)

model = Model(inputs, x2)

The model is built with SimpleRNN from keras.layers. The activation functions are tanh for the RNN layer and softmax for the output layer.
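With an input shape of (28, 28), the RNN reads each image as 28 time steps (rows) of 28 features (pixels per row). The sketch below walks through that forward pass in NumPy using the standard simple-RNN recurrence `h = tanh(x_t @ W + h @ U + b)`. The weights are random stand-ins, so this only illustrates the shapes and the computation, not a trained model.

```python
import numpy as np

rng = np.random.default_rng(0)
batch, steps, features, units, classes = 4, 28, 28, 64, 10

# Random stand-ins for a batch of images and for the layer weights.
x = rng.random((batch, steps, features))
W = rng.normal(0, 0.1, (features, units))   # input-to-hidden weights
U = rng.normal(0, 0.1, (units, units))      # hidden-to-hidden weights
b = np.zeros(units)
W_out = rng.normal(0, 0.1, (units, classes))
b_out = np.zeros(classes)

# Recurrence: each image row is one time step.
h = np.zeros((batch, units))
for t in range(steps):
    h = np.tanh(x[:, t, :] @ W + h @ U + b)

# Dense + softmax on the last hidden state.
logits = h @ W_out + b_out
exp = np.exp(logits - logits.max(axis=1, keepdims=True))
probs = exp / exp.sum(axis=1, keepdims=True)

print(h.shape, probs.shape)
```

Only the final hidden state is passed to the Dense layer, which matches the default behavior of returning the last output rather than the full sequence.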

model.summary()

The summary function prints a summary of the model, showing the number of parameters and the output shape of each layer.
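The parameter counts can also be worked out by hand as a sanity check. A small sketch, assuming the default settings where both layers use a bias:

```python
input_dim, units, classes = 28, 64, 10

# SimpleRNN: input weights + recurrent weights + bias.
rnn_params = input_dim * units + units * units + units
# Dense: weights + bias.
dense_params = units * classes + classes

print(rnn_params, dense_params, rnn_params + dense_params)  # 5952 650 6602
```

These numbers should match the per-layer and total parameter counts printed by model.summary() for the model above.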

(3) Setting the loss, optimizer, and metrics

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=["accuracy"])

The compile function sets the loss function to categorical crossentropy, the optimizer to adam, and the metric to accuracy.
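To make the loss and the metric concrete, here is a small NumPy sketch of categorical crossentropy and accuracy on made-up predictions. It ignores numerical safeguards such as clipping that a real implementation would add.

```python
import numpy as np

# One-hot targets and made-up predicted probabilities (2 samples, 3 classes).
y_true = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0]])
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.8, 0.1]])

# Per-sample loss is -sum(y_true * log(y_pred)), i.e. -log of the
# probability assigned to the correct class.
per_sample = -np.sum(y_true * np.log(y_pred), axis=1)
loss = per_sample.mean()

# Accuracy compares the argmax of prediction and target.
accuracy = np.mean(y_pred.argmax(axis=1) == y_true.argmax(axis=1))

print(per_sample, loss, accuracy)
```

This is why the labels had to be one-hot encoded first: the loss multiplies each target vector element-wise with the predicted probabilities.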

(4) Training

hist = model.fit(train_noisy_images, train_labels, validation_data=(test_noisy_images, test_labels), epochs=5, verbose=2)

The other arguments are self-explanatory, but I wasn't sure what verbose does, so I looked it up.

verbose: 'auto', 0, 1, or 2. Verbosity mode. 0 = silent, 1 = progress bar, 2 = one line per epoch. 'auto' defaults to 1 for most cases, but 2 when used with ParameterServerStrategy. Note that the progress bar is not particularly useful when logged to a file, so verbose=2 is recommended

<Source>

https://keras.io/api/models/model_training_apis/

Keras documentation: Model training APIs

** Comparison

- verbose = 1

- verbose = 2

(5) Checking the training results

plt.plot(hist.history['accuracy'], label='accuracy')

plt.plot(hist.history['loss'], label='loss')

plt.plot(hist.history['val_accuracy'], label='val_accuracy')

plt.plot(hist.history['val_loss'], label='val_loss')

plt.legend(loc='upper left')

plt.show()

Plotting the training history shows that the accuracy is very high and the loss is very low. Even a simple RNN model performs reasonably well!

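--- Checking the result of the finished model on a single test image

res = model.predict(test_noisy_images[777:778])

Let's check the image at index 777.

plt.bar(range(10), res[0], color='red')

plt.bar(np.array(range(10)) + 0.35, test_labels[777])

plt.show()

The red bars are the predicted probabilities and the blue bars are the correct answer. The model correctly predicts 1, though small probabilities for 7 and 8 are faintly visible. The performance looks decent.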
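The same comparison can be done without a plot by taking the argmax of the probability vector. In this sketch, `probs` is a made-up stand-in for `res[0]` (its values are invented to resemble the chart, not actual model output) and `label` stands in for `test_labels[777]`.

```python
import numpy as np

# Made-up stand-in for res[0]: 10 probabilities that sum to 1.
probs = np.array([0.0, 0.90, 0.0, 0.0, 0.0, 0.0, 0.0, 0.06, 0.04, 0.0])
# One-hot label for digit 1, standing in for test_labels[777].
label = np.eye(10)[1]

predicted_digit = int(np.argmax(probs))
true_digit = int(np.argmax(label))

# Indices of the three largest probabilities, most likely first.
top3 = np.argsort(probs)[::-1][:3]

print(predicted_digit, true_digit, top3)
```

Note that `test_noisy_images[777:778]` keeps the batch dimension, which is why the prediction for the single image is read from `res[0]`.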

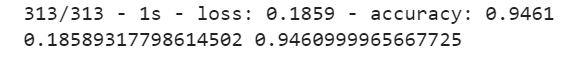

(6) Evaluating on the test dataset

loss, acc = model.evaluate(test_noisy_images, test_labels, verbose=2)

print(loss, acc)

Pass the test dataset to evaluate.

With an accuracy of 95%, the model evaluation is done.

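(7) Saving and loading the model

# Save the model

model.save("./mnist_rnn.h5")

# Load the model (assumes tensorflow has been imported as tf)

new_model = tf.keras.models.load_model('./mnist_rnn.h5')

Saving it as an .h5 file is all it takes.

** If you worked in Colab, this code downloads the model saved in Colab to your computer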

from google.colab import files

files.download('./mnist_rnn.h5')
