Learning Spoons class notes

<Previous post>

https://silvercoding.tistory.com/70

[FIFA DATA] Which players should Manchester United sign for the 2019/2020 season? The EDA process


1. Data introduction & loading the data

<Rossmann Store Sales>

https://www.kaggle.com/c/rossmann-store-sales/data?select=test.csv


This is the Rossmann data that was used in the Kaggle competition linked above.

- train.csv - historical data including Sales

- test.csv - historical data excluding Sales

- sample_submission.csv - a sample submission file in the correct format

- store.csv - supplemental information about the stores

This post uses a reduced version of the data to predict store sales.

(Data: provided by Learning Spoons)

import os

import pandas as pd

os.chdir('../data')  # move to the folder that holds the CSV files

train = pd.read_csv("lspoons_train.csv")

test = pd.read_csv("lspoons_test.csv")

store = pd.read_csv("store.csv")

lspoons_train.csv - training data

lspoons_test.csv - test data to predict

store.csv - supplementary data with information about each store

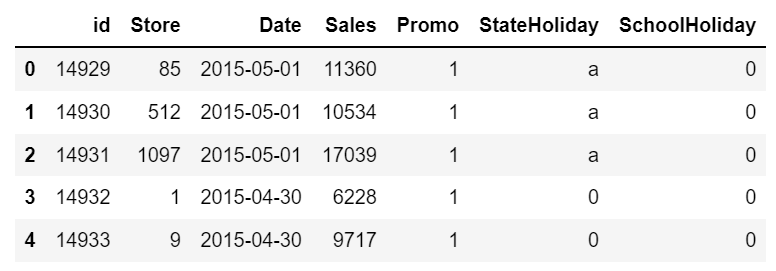

train.head()

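Column information

- id

- Store: id of each store

- Date: date

- Sales: sales on that date

- Promo: whether a sales promotion was running

- StateHoliday: state holiday flag. 0 if the day is not a holiday, otherwise the holiday type (a, b, c)

- SchoolHoliday: whether the day is a school holiday

We build a model that predicts Sales from the columns above.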

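- Analysis plan

1. Base modeling ( feature engineering - variable selection - modeling )

2. Second-round modeling ( merge the store data - feature engineering - variable selection - modeling )

3. Parameter tuning

... repeat modeling ( further modeling is up to you, to be organized on GitHub )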

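1. Base modeling

: Build the most basic model. (missing-value handling, one-hot encoding)

What is feature engineering?

- Creating new input variables from the existing input variables for prediction

- One way to raise the predictive performance of a machine learning model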

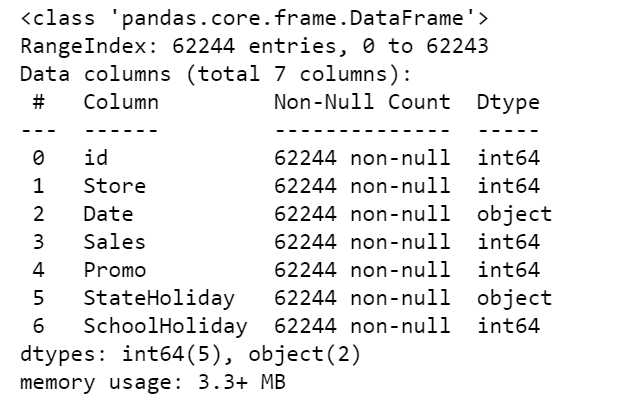

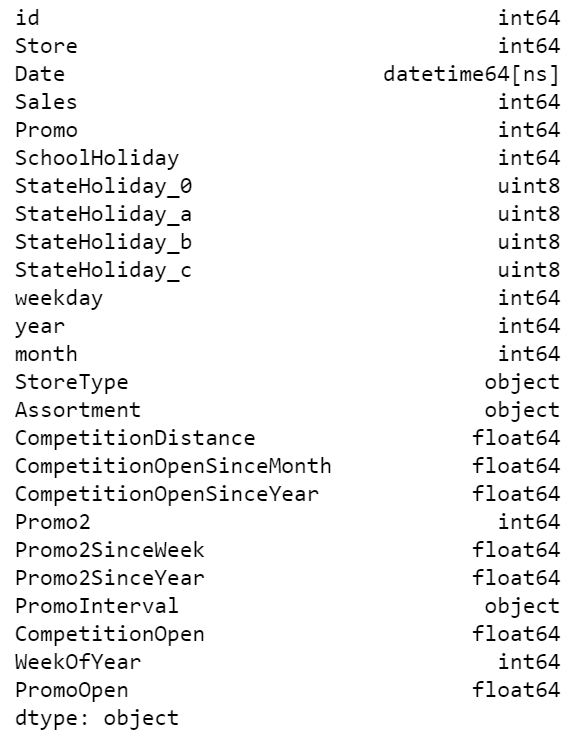

train.info()

There are no missing values. The Date and StateHoliday columns are of object type, so they are preprocessed next.

- StateHoliday column one-hot encoding

train = pd.get_dummies(columns=['StateHoliday'],data=train)

test = pd.get_dummies(columns=['StateHoliday'],data=test)

The get_dummies function one-hot encodes the StateHoliday column.

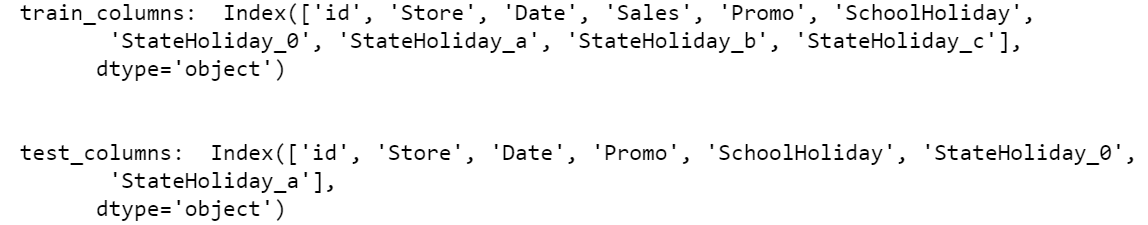

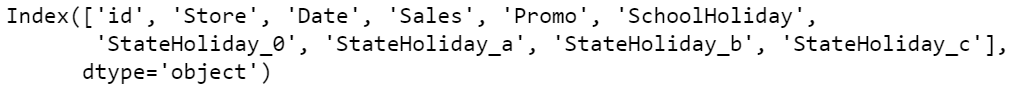

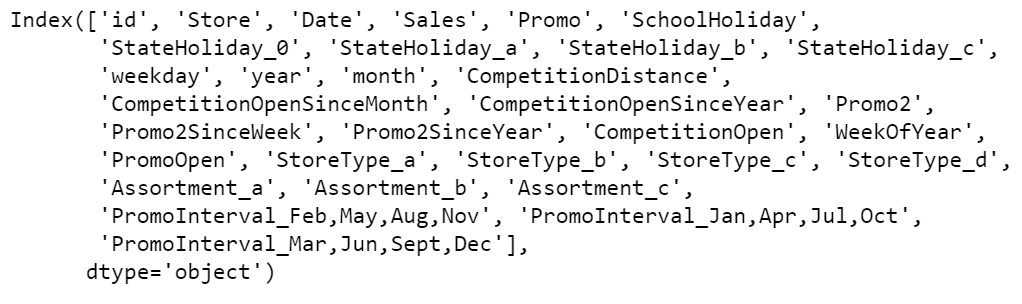

print("train_columns: ", train.columns, end="\n\n\n")

print("test_columns: ", test.columns)

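Looking at the newly created columns, train has StateHoliday_b and StateHoliday_c but test does not. A model trained on columns that are missing from test cannot predict on test, so this would cause a problem later.

test['StateHoliday_b'] = 0

test['StateHoliday_c'] = 0

The same columns are therefore created in the test dataset, filled with 0.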
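A more general way to keep the two frames aligned is to reindex the encoded test frame against the training columns, so that any missing dummy column is created and filled with 0. The sketch below runs on made-up toy data; `toy_train` and `toy_test` are hypothetical names, not the class data.

```python
import pandas as pd

# Hypothetical toy frames; the real data comes from lspoons_train.csv / lspoons_test.csv.
toy_train = pd.DataFrame({"Promo": [1, 0, 1, 0], "StateHoliday": ["0", "a", "b", "c"]})
toy_test = pd.DataFrame({"Promo": [0, 1], "StateHoliday": ["0", "a"]})

toy_train = pd.get_dummies(toy_train, columns=["StateHoliday"])
toy_test = pd.get_dummies(toy_test, columns=["StateHoliday"])

# Add every column that exists in train but not in test (filled with 0)
# and put the columns in the same order as in train.
toy_test = toy_test.reindex(columns=toy_train.columns, fill_value=0)

print(toy_test.columns.tolist())
```

On the real data, train also holds the Sales target, so it is probably safer to reindex against the list of feature columns only rather than all of `train.columns`.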

- feature engineering using Date column

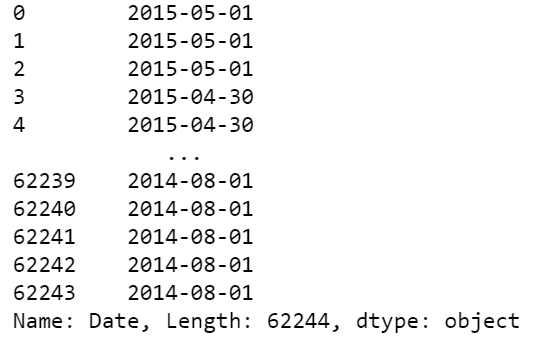

train['Date']

The Date column looks like a date, but its dtype is object, so pandas does not treat it as a date.

train['Date'] = pd.to_datetime( train['Date'] )

test['Date'] = pd.to_datetime( test['Date'] )

The pandas to_datetime function converts it to a datetime column, which makes date calculations convenient.

# create the weekday column (Monday=0 ... Sunday=6)

train['weekday'] = train['Date'].dt.weekday

test['weekday'] = test['Date'].dt.weekday

# create the year column

train['year'] = train['Date'].dt.year

test['year'] = test['Date'].dt.year

# create the month column

train['month'] = train['Date'].dt.month

test['month'] = test['Date'].dt.month
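To see what these accessors return, here is a minimal sketch on three made-up dates (the `dates` series is hypothetical, not taken from the class data).

```python
import pandas as pd

# Three illustrative dates: a Friday, a Saturday, and a Monday.
dates = pd.to_datetime(pd.Series(["2015-07-31", "2015-08-01", "2015-08-03"]))

features = pd.DataFrame({
    "weekday": dates.dt.weekday,  # Monday=0 ... Sunday=6
    "year": dates.dt.year,
    "month": dates.dt.month,
})

print(features)
```

Note that weekday is an integer code, so a tree model such as XGBoost can split on it directly without one-hot encoding.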

- Baseline modeling

from xgboost import XGBRegressor

train.columns

xgb = XGBRegressor( n_estimators= 300 , learning_rate=0.1 , random_state=2020 )

xgb.fit(train[['Promo','SchoolHoliday','StateHoliday_0','StateHoliday_a','StateHoliday_b','StateHoliday_c','weekday','year','month']],

train['Sales'])

The XGB model is trained on these columns.

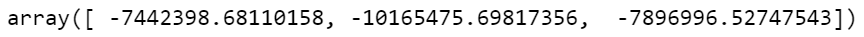

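from sklearn.model_selection import cross_val_score

cross_val_score(xgb, train[['Promo','SchoolHoliday','StateHoliday_0','StateHoliday_a','StateHoliday_b','StateHoliday_c','weekday','year','month']], train['Sales'], scoring="neg_mean_squared_error", cv=3)

Cross validation on all of the columns gives the error shown above (the scores are negative MSE per fold, so values closer to 0 are better). Let's reduce the error with further work!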
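Because scoring="neg_mean_squared_error" returns the negated MSE of each fold, the raw numbers are hard to read. A small sketch of converting them to RMSE, which has the same unit as Sales, is shown below; the fold scores are made-up values, not the results of this post.

```python
import numpy as np

# Hypothetical fold scores in the form cross_val_score returns them
# with scoring="neg_mean_squared_error" (illustrative values only).
neg_mse_scores = np.array([-4_000_000.0, -6_250_000.0, -9_000_000.0])

mse_per_fold = -neg_mse_scores          # flip the sign to get the MSE
rmse_per_fold = np.sqrt(mse_per_fold)   # same unit as Sales

print(rmse_per_fold)         # [2000. 2500. 3000.]
print(rmse_per_fold.mean())  # 2500.0
```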

cf. Creating the Kaggle submission file

test['Sales'] = xgb.predict(test[['Promo','SchoolHoliday','StateHoliday_0','StateHoliday_a','StateHoliday_b','StateHoliday_c','weekday','year','month']])

The test dataset is fed to the trained model to get the predictions.

test[['id','Sales']].to_csv("submission.csv",index=False)

- Variable selection

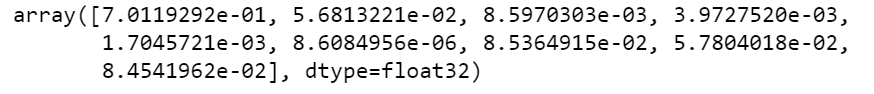

xgb.feature_importances_

feature_importances_ gives the importance of each variable.

input_var = ['Promo','SchoolHoliday','StateHoliday_0','StateHoliday_a','StateHoliday_b','StateHoliday_c','weekday','year','month']

input_var stores the input variables the model was trained on, in the same order (Sales is the target and is not included).

imp_df = pd.DataFrame({"var": input_var,

"imp": xgb.feature_importances_})

imp_df = imp_df.sort_values(['imp'],ascending=False)

imp_df

A DataFrame of variable importances is created and sorted in descending order. Promo has by far the highest importance, while the StateHoliday columns are generally low.

import matplotlib.pyplot as plt

plt.bar(imp_df['var'],imp_df['imp'])

plt.xticks(rotation=90)

plt.show()

Plotting the importances makes this easy to see at a glance: the columns after SchoolHoliday appear to contribute little.

cross_val_score(xgb, train[['Promo', 'weekday', 'month','year', 'SchoolHoliday']], train['Sales'], scoring="neg_mean_squared_error", cv=3)

The error is lower than when all columns were used. Next, we test how many columns give the lowest error.

import numpy as np

score_list=[]

selected_varnum=[]

# cross-validate with the top i variables, for i = 1..9

for i in range(1,10):

    selected_var = imp_df['var'].iloc[:i].to_list()

    scores = cross_val_score(xgb,

                             train[selected_var],

                             train['Sales'],

                             scoring="neg_mean_squared_error", cv=3)

    score_list.append(-np.mean(scores))  # mean MSE over the folds

    selected_varnum.append(i)

    print(i)

plt.plot(selected_varnum, score_list)

Running cross validation for each number of variables shows that the error is lowest with 2 variables.
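Rather than reading the minimum off the plot, the best number of variables can also be picked programmatically. The sketch below uses made-up scores in place of the real score_list; the values are illustrative only.

```python
import numpy as np

# Hypothetical mean MSE for each number of selected variables.
selected_varnum = [1, 2, 3, 4, 5]
score_list = [5.2e6, 4.8e6, 4.9e6, 5.0e6, 5.1e6]

best_idx = int(np.argmin(score_list))   # position of the lowest error
best_k = selected_varnum[best_idx]      # number of variables at that position

print(best_k)  # 2
```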

Cross validation is then run with these 2 predictors.

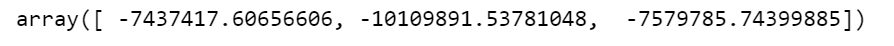

cross_val_score(xgb, train[['Promo', 'weekday']], train['Sales'], scoring="neg_mean_squared_error", cv=3)

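The error went down in every fold except the second. After training the model with the 2 predictors, the Kaggle score of the test submission also went down. (The steps are repetitive, so they are omitted from this post.)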

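2. Second-round modeling

- Merging the store data

store

store dataset: a summary of the characteristics of each store

Column meanings

- Store: unique id of the store

- StoreType: type of store

- Assortment: assortment level of the store (the range of products it carries)

- CompetitionDistance: distance to the nearest competitor store

- CompetitionOpenSinceMonth: month in which the nearest competitor opened

- CompetitionOpenSinceYear: year in which the nearest competitor opened

- Promo2: whether the store takes part in a continuing (recurring) promotion

- Promo2SinceWeek / Promo2SinceYear: when the store started Promo2, if it takes part

- PromoInterval: the months in which each round of Promo2 starts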

train = pd.merge(train, store, on=['Store'], how='left')

test = pd.merge(test, store, on=['Store'], how='left')

The store dataset is merged into the train and test datasets on the Store column.
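A left merge silently leaves NaN for stores that are missing from store.csv, and it would duplicate rows if a Store id appeared twice there. A minimal sketch of guarding against both, on made-up toy frames (`toy_sales` and `toy_store` are hypothetical names):

```python
import pandas as pd

# Hypothetical toy data: Store 3 has no row in toy_store.
toy_sales = pd.DataFrame({"Store": [1, 1, 2, 3], "Sales": [5263, 5020, 6064, 8314]})
toy_store = pd.DataFrame({"Store": [1, 2], "StoreType": ["c", "a"]})

merged = pd.merge(
    toy_sales, toy_store, on="Store", how="left",
    validate="many_to_one",  # raises MergeError if a Store id is duplicated in toy_store
    indicator=True,          # adds a _merge column: "both" or "left_only"
)

print(len(merged) == len(toy_sales))            # the row count is unchanged
print((merged["_merge"] == "left_only").sum())  # rows without store information
```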

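- Creating the CompetitionOpen column

: how many months the nearest competitor has been open as of each row's date (positive: the competitor opened before that date, negative: after)

train['CompetitionOpen'] = ( 12*( train['year'] - train['CompetitionOpenSinceYear'] ) +

(train['month'] - train['CompetitionOpenSinceMonth']) )

test['CompetitionOpen'] = ( 12*( test['year'] - test['CompetitionOpenSinceYear'] ) +

(test['month'] - test['CompetitionOpenSinceMonth']) )

The year and month columns come from each row's Date. Subtracting the competitor's opening year from the row's year and multiplying by 12 converts the year difference to months. Adding the difference between the row's month and the competitor's opening month gives the number of months since the competitor opened.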
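As a quick check of the formula, here is a worked example on two made-up rows (the `toy` frame is hypothetical): one competitor that opened in September 2008 and one that opens in January 2016, both seen from a row dated July 2015.

```python
import pandas as pd

# Two illustrative rows, both dated July 2015.
toy = pd.DataFrame({
    "year": [2015, 2015],
    "month": [7, 7],
    "CompetitionOpenSinceYear": [2008.0, 2016.0],
    "CompetitionOpenSinceMonth": [9.0, 1.0],
})

toy["CompetitionOpen"] = (
    12 * (toy["year"] - toy["CompetitionOpenSinceYear"])
    + (toy["month"] - toy["CompetitionOpenSinceMonth"])
)

# 12*7 + (7-9) = 82 months open; 12*(-1) + (7-1) = -6, i.e. opens 6 months later.
print(toy["CompetitionOpen"].tolist())  # [82.0, -6.0]
```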

- Creating the PromoOpen column

: how many months Promo2 has been running as of each row's date

train['WeekOfYear'] = train['Date'].dt.isocalendar().week.astype(int) # ISO week number of the date (dt.weekofyear was removed in pandas 2.0)

test['WeekOfYear'] = test['Date'].dt.isocalendar().week.astype(int)

The start of Promo2 is given as a year and a week, so the week number of each date is computed from the Date column and stored in the WeekOfYear column.

train['PromoOpen'] = ( 12* ( train['year'] - train['Promo2SinceYear'] ) +

(train['WeekOfYear'] - train['Promo2SinceWeek']) / 4 )

test['PromoOpen'] = ( 12* ( test['year'] - test['Promo2SinceYear'] ) +

(test['WeekOfYear'] - test['Promo2SinceWeek']) / 4 )

As before, the year difference is converted to months, and the week difference is divided by 4 to approximate months. Their sum is the number of months since Promo2 started.
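The sketch below traces the same calculation on two made-up rows (the `toy` frame is hypothetical). It also shows what happens for a store with no Promo2 start date: the missing values carry through, so PromoOpen is NaN for that row. XGBoost treats NaN as a missing value, so such rows can still be used for training.

```python
import pandas as pd

# Row 0: Promo2 started in week 31 of 2014. Row 1: no Promo2 start date.
toy = pd.DataFrame({
    "Date": pd.to_datetime(["2015-07-31", "2015-07-31"]),
    "Promo2SinceYear": [2014.0, float("nan")],
    "Promo2SinceWeek": [31.0, float("nan")],
})

toy["year"] = toy["Date"].dt.year
toy["WeekOfYear"] = toy["Date"].dt.isocalendar().week.astype(int)  # ISO week number

toy["PromoOpen"] = (
    12 * (toy["year"] - toy["Promo2SinceYear"])
    + (toy["WeekOfYear"] - toy["Promo2SinceWeek"]) / 4
)

print(toy[["WeekOfYear", "PromoOpen"]])
```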

- 원핫인코딩 ( get_dummies() )

train.dtypes

데이터타입을 확인 해 보면 object인 컬럼이 3가지 있다. 3개의 컬럼을 get_dummies를 이용하여 원핫인코딩 해준다.

train = pd.get_dummies(columns=['StoreType'],data=train)

test = pd.get_dummies(columns=['StoreType'],data=test)train = pd.get_dummies(columns=['Assortment'],data=train)

test = pd.get_dummies(columns=['Assortment'],data=test)train = pd.get_dummies(columns=['PromoInterval'],data=train)

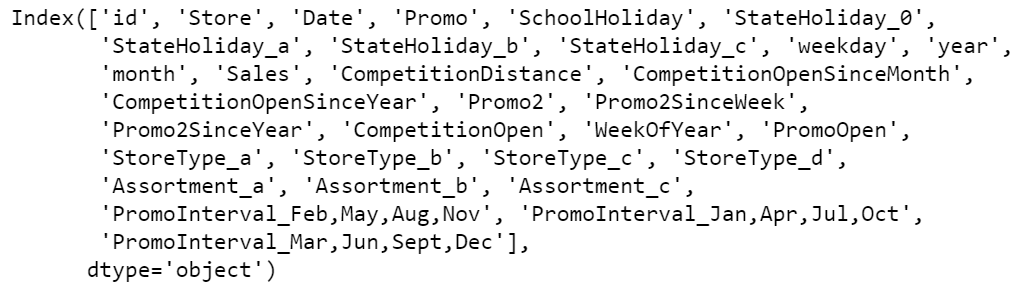

test = pd.get_dummies(columns=['PromoInterval'],data=test)train.columns

test.columns

train column과 test column 이 동일한 것을 확인하였다.

- Modeling

input_var = ['Promo', 'SchoolHoliday',

'StateHoliday_0', 'StateHoliday_a', 'StateHoliday_b', 'StateHoliday_c',

'weekday', 'year', 'month', 'CompetitionDistance',

'Promo2',

'CompetitionOpen', 'WeekOfYear',

'PromoOpen', 'StoreType_a', 'StoreType_b', 'StoreType_c', 'StoreType_d',

'Assortment_a', 'Assortment_b', 'Assortment_c',

'PromoInterval_Feb,May,Aug,Nov', 'PromoInterval_Jan,Apr,Jul,Oct',

'PromoInterval_Mar,Jun,Sept,Dec']

The columns that are not needed are left out, and the rest are stored in input_var.

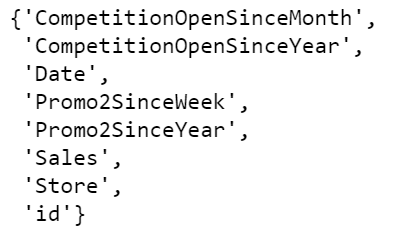

set(train) - set(input_var)

(Note) These are the columns that were not included in input_var.

xgb = XGBRegressor( n_estimators=300, learning_rate= 0.1, random_state=2020)

xgb.fit(train[input_var],train['Sales'])

The same xgb model as before is used.

cross_val_score(xgb, train[input_var], train['Sales'], scoring="neg_mean_squared_error", cv=3)

After merging the store dataset, preprocessing, and modeling again, the error dropped sharply.

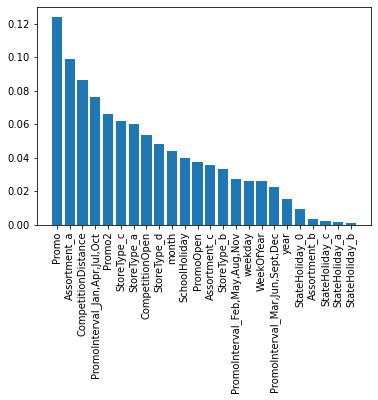

- Variable importance

imp_df = pd.DataFrame({'var':input_var,

'imp':xgb.feature_importances_})

imp_df = imp_df.sort_values(['imp'],ascending=False)

plt.bar(imp_df['var'],

imp_df['imp'])

plt.xticks(rotation=90)

plt.show()

Visualizing the variable importances suggests that training on a selected subset would work better than using every variable.

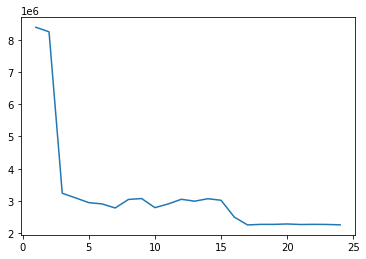

score_list=[]

selected_varnum=[]

# cross-validate with the top i variables, for i = 1..24

for i in range(1,25):

    selected_var = imp_df['var'].iloc[:i].to_list()

    scores = cross_val_score(xgb,

                             train[selected_var],

                             train['Sales'],

                             scoring="neg_mean_squared_error", cv=3)

    score_list.append(-np.mean(scores))  # mean MSE over the folds

    selected_varnum.append(i)

    print(i)

plt.plot(selected_varnum, score_list)

The error keeps trending down, but it looks roughly flat after 17 variables. So the top 17 variables are selected for training.

input_var = imp_df['var'].iloc[:17].tolist()

xgb.fit(train[input_var],

train['Sales'])

cross_val_score(xgb, train[input_var], train['Sales'], scoring="neg_mean_squared_error", cv=3)

The error went down overall.

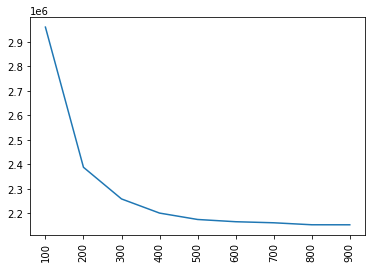

3. Parameter tuning

estim_list = [100,200,300,400,500,600,700,800,900]

score_list = []

# cross-validate once for each candidate n_estimators

for i in estim_list:

    xgb = XGBRegressor( n_estimators=i, learning_rate= 0.1, random_state=2020)

    scores = cross_val_score(xgb, train[input_var], train['Sales'], scoring="neg_mean_squared_error", cv=3)

    score_list.append(-np.mean(scores))  # mean MSE over the folds

    print(i)

plt.plot(estim_list,score_list)

plt.xticks(rotation=90)

plt.show()

The error was computed for different values of n_estimators and plotted; n_estimators=400 looks appropriate.

xgb = XGBRegressor( n_estimators=400, learning_rate= 0.1, random_state=2020)

xgb.fit(train[input_var],

train['Sales'])

cross_val_score(xgb, train[input_var], train['Sales'], scoring="neg_mean_squared_error", cv=3)

Changing n_estimators to 400 lowered the cross-validation error.

Unfortunately, after the parameter tuning the error on the Kaggle test dataset came out higher. I will keep trying further feature engineering, such as handling missing values and outliers. (To be uploaded to GitHub later)
