Learning Spoons (러닝스푼즈) class notes

< Previous post >

https://silvercoding.tistory.com/67

[Bank Marketing Data Analysis] 2. Python Boosting, Using XGBoost


Explaining "what the conclusion is" is an important part of a data scientist's job.

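From the predictions alone, you cannot explain which patterns the model relied on or why it made a given prediction. If you cannot explain that, collaborators from other fields will lose trust in the results.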

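Consider a business example. Suppose a machine learning project predicts a movie's box-office performance and the model says the movie will flop. Someone will likely ask how the flop can be prevented. If we cannot identify and fix the existing weak points, the prediction is meaningless from a business perspective.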

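That is why being able to explain results matters so much, and feature importance helps here. It shows which variables had a large influence on the predictions and lets us examine in detail how a particular variable affected them.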

Feature importance

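- Which of the input variables used in the model had the greatest influence on the target?

- A numerical score that quantifies that influence

- Can be computed for tree-based models (decision trees, random forests)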

In the previous posts, we computed feature importance for random forest and XGBoost, both of which are tree-based models.

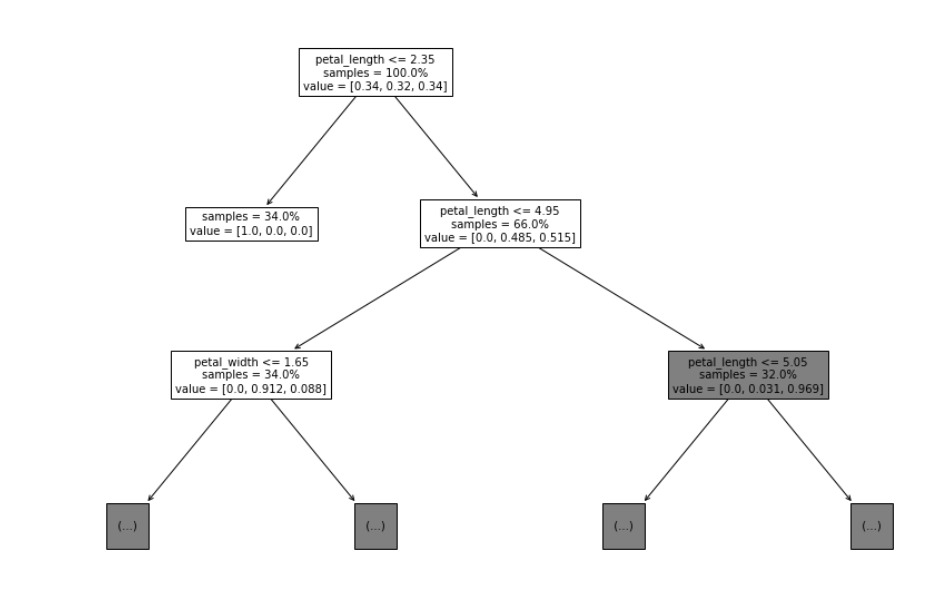

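Feature importance in a decision tree

- How often the input variable is used when building the decision tree

- How much splitting on that variable reduces the impurity (the "complexity") of the resulting regions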
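A minimal sketch of the second point, in pure Python with made-up labels. Gini impurity is one common impurity measure (scikit-learn's default for classification trees), and a split's contribution is the drop in impurity from the parent node to its sample-weighted children. As far as I know, scikit-learn's impurity-based `feature_importances_` adds up these drops (weighted by the share of samples reaching each node) over every node that splits on a given feature, then normalizes the totals to sum to 1. The helper names `gini` and `impurity_decrease` are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels: 1 - sum of squared class shares."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_decrease(parent, left, right):
    """Impurity reduction from splitting `parent` into `left` and `right`,
    with each child weighted by its share of the parent's samples."""
    n = len(parent)
    weighted_children = (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
    return gini(parent) - weighted_children


# Hypothetical node: 4 "no" (0) and 4 "yes" (1) customers
parent = [0, 0, 0, 0, 1, 1, 1, 1]

# A split on a made-up feature that separates the classes perfectly
print(impurity_decrease(parent, [0, 0, 0, 0], [1, 1, 1, 1]))
# A split that leaves both sides just as mixed as the parent
print(impurity_decrease(parent, [0, 0, 1, 1], [0, 0, 1, 1]))
```

The perfect split removes all of the parent's impurity, while the useless split removes none, so a feature that keeps producing splits like the first one ends up with a high importance score.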

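Shapley values

: the magnitude of each variable's influence on the prediction

: the direction of that influence (how the variable affects the prediction)

(Example) Player A on soccer team B

- The magnitude of each player's influence on the team's results

- How that player affects the results

- (Team B's win rate with player A) - (Team B's win rate without player A) = 7%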
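The "with A minus without A" difference above is A's marginal contribution for one particular lineup. A Shapley value averages that marginal contribution over every subset S of the other players, weighting each by |S|!(n - |S| - 1)!/n!. The sketch below computes it exactly for a three-player team. The win rates and the helper `shapley_value` are hypothetical, chosen only to show the averaging and the efficiency property: the players' values add up to v(all players) - v(no players).

```python
from itertools import combinations
from math import factorial

# Hypothetical win rate for every subset (coalition) of three players.
# The numbers are made up for illustration only.
PLAYERS = ("A", "B", "C")
WIN_RATE = {
    frozenset(): 0.30,
    frozenset("A"): 0.37,
    frozenset("B"): 0.35,
    frozenset("C"): 0.32,
    frozenset("AB"): 0.44,
    frozenset("AC"): 0.40,
    frozenset("BC"): 0.38,
    frozenset("ABC"): 0.48,
}


def shapley_value(player, players, value):
    """Exact Shapley value: weighted average of the player's marginal
    contribution over every coalition that does not contain the player."""
    others = [p for p in players if p != player]
    n = len(players)
    total = 0.0
    for size in range(len(others) + 1):
        weight = factorial(size) * factorial(n - size - 1) / factorial(n)
        for coalition in combinations(others, size):
            s = frozenset(coalition)
            total += weight * (value[s | {player}] - value[s])
    return total


phi = {p: shapley_value(p, PLAYERS, WIN_RATE) for p in PLAYERS}
print(phi)

# Efficiency: contributions add up to v(everyone) - v(nobody)
print(sum(phi.values()), WIN_RATE[frozenset("ABC")] - WIN_RATE[frozenset()])
```

Exact enumeration grows exponentially with the number of players (features), which is why libraries such as shap rely on model-specific algorithms or approximations instead.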

SHAP value hands-on

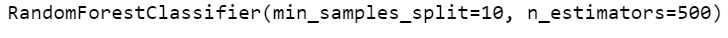

To focus on the SHAP part, we repeat everything up to XGBoost training exactly as before.

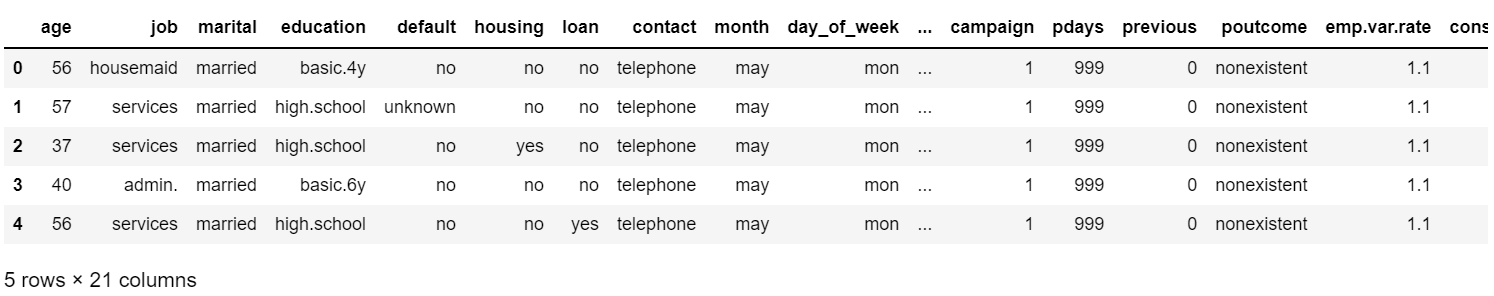

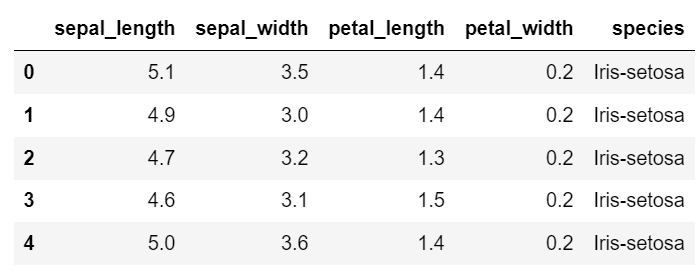

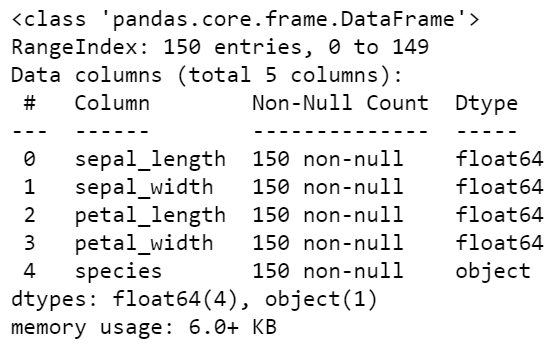

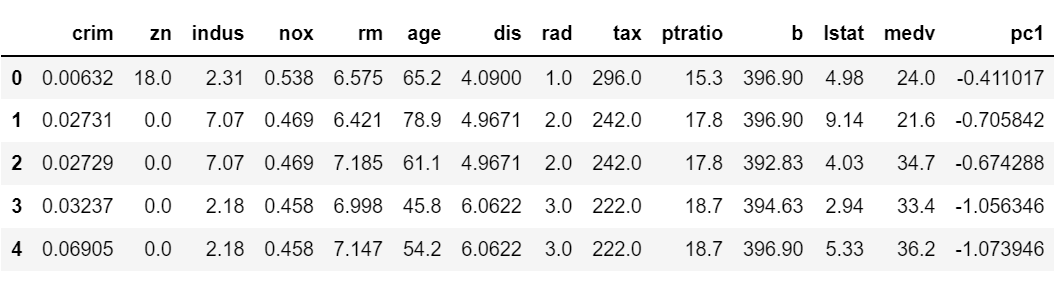

Loading the data

import os

import pandas as pd

import numpy as np

os.chdir('./data')  # change this to your own data path

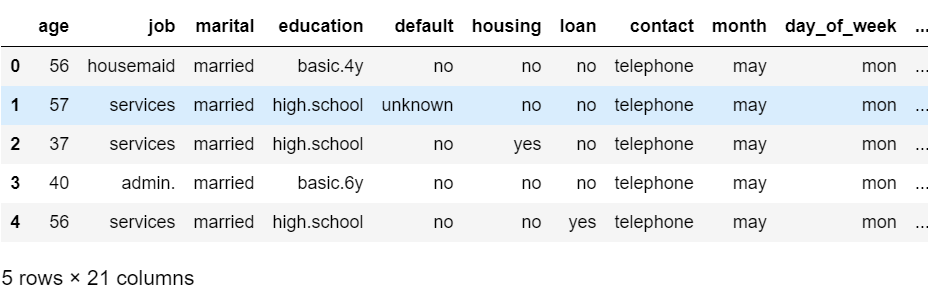

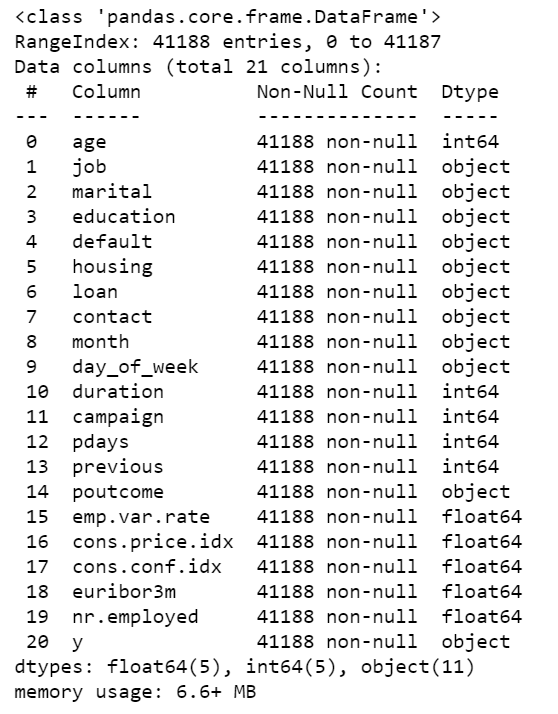

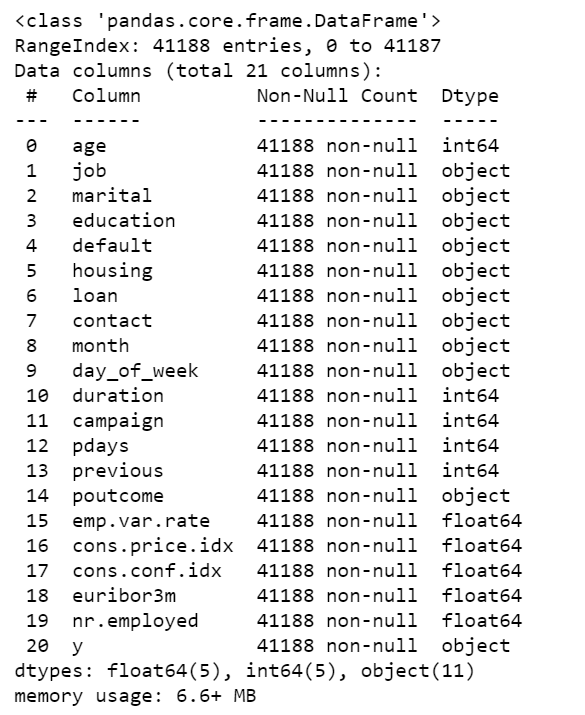

data = pd.read_csv("bank-additional-full.csv", sep=";")

This is the term-deposit subscription dataset used in the previous post.

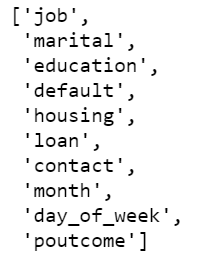

data = pd.get_dummies(data, columns = ['job','marital','education','default','housing','loan','contact','month','day_of_week','poutcome'])

One-hot encode the categorical variables with get_dummies.
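To see what `get_dummies` produces, here is a minimal sketch on a hypothetical three-row frame (column names borrowed from the dataset, values made up). Each category becomes its own `column_category` indicator column, and the original column is dropped. Depending on the pandas version the indicator columns are `uint8` or `bool`, so the check below casts them to `int`.

```python
import pandas as pd

# Hypothetical mini version of the bank data (values made up)
toy = pd.DataFrame({
    "age": [30, 45, 52],
    "marital": ["married", "single", "married"],
    "housing": ["yes", "no", "unknown"],
})

encoded = pd.get_dummies(toy, columns=["marital", "housing"])
print(encoded.columns.tolist())

# Every row has exactly one indicator set per original categorical column
print(encoded[["housing_no", "housing_unknown", "housing_yes"]].astype(int).sum(axis=1).tolist())
```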

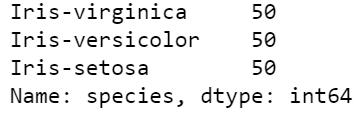

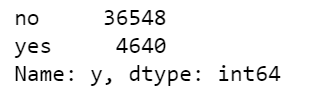

data['y'].value_counts()

Since this is a classification problem, the target variable is naturally categorical ("yes"/"no").

data['y'] = np.where( data['y'] == 'no', 0, 1)

However, the shap package works best with a numeric target, so we convert it to numbers ("no" → 0, "yes" → 1).
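One design point worth noting: `np.where(data['y'] == 'no', 0, 1)` maps anything other than "no" to 1, including unexpected labels. A minimal sketch on a hypothetical Series compares it with `Series.map`, which turns unknown labels into NaN so they can be caught before training.

```python
import numpy as np
import pandas as pd

# Hypothetical target column; the real one holds "yes"/"no"
y = pd.Series(["no", "yes", "no", "no"])

# Same rule as the post: "no" -> 0, anything else -> 1
y_where = np.where(y == "no", 0, 1)

# Stricter alternative: an unexpected label would become NaN instead of 1
y_map = y.map({"no": 0, "yes": 1})
assert not y_map.isna().any(), "unexpected label in the target column"

print(y_where.tolist(), y_map.tolist())
```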

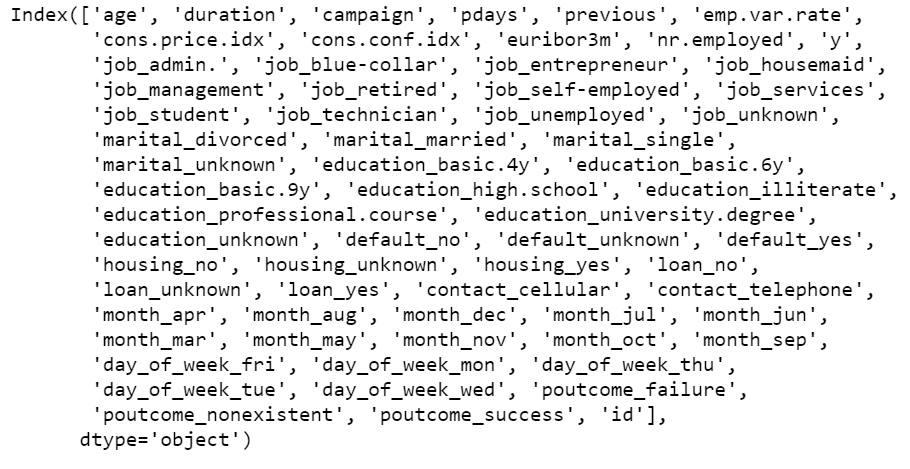

Training XGBoost

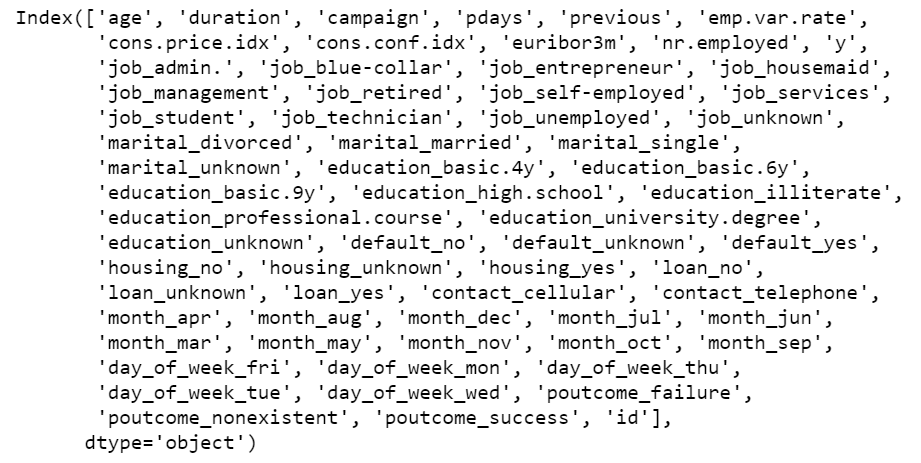

input_var = ['age', 'duration', 'campaign', 'pdays', 'previous', 'emp.var.rate',

'cons.price.idx', 'cons.conf.idx', 'euribor3m', 'nr.employed',

'job_admin.', 'job_blue-collar', 'job_entrepreneur', 'job_housemaid',

'job_management', 'job_retired', 'job_self-employed', 'job_services',

'job_student', 'job_technician', 'job_unemployed', 'job_unknown',

'marital_divorced', 'marital_married', 'marital_single',

'marital_unknown', 'education_basic.4y', 'education_basic.6y',

'education_basic.9y', 'education_high.school', 'education_illiterate',

'education_professional.course', 'education_university.degree',

'education_unknown', 'default_no', 'default_unknown', 'default_yes',

'housing_no', 'housing_unknown', 'housing_yes', 'loan_no',

'loan_unknown', 'loan_yes', 'contact_cellular', 'contact_telephone',

'month_apr', 'month_aug', 'month_dec', 'month_jul', 'month_jun',

'month_mar', 'month_may', 'month_nov', 'month_oct', 'month_sep',

'day_of_week_fri', 'day_of_week_mon', 'day_of_week_thu',

'day_of_week_tue', 'day_of_week_wed', 'poutcome_failure',

'poutcome_nonexistent', 'poutcome_success']

Put every input variable except the y column into a list.
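Instead of typing the list by hand, it can be derived from the DataFrame's own columns. A minimal sketch on a hypothetical stand-in frame (values made up). Note that later in the post a `pred` column is added to `data`, so the list should be built before that step, or `pred` should be excluded as well.

```python
import pandas as pd

# Hypothetical stand-in for the one-hot encoded bank data (values made up)
data = pd.DataFrame({
    "age": [30, 45],
    "duration": [120, 300],
    "job_admin.": [1, 0],
    "job_retired": [0, 1],
    "y": [0, 1],
})

# Every column except the target, in the DataFrame's own column order
input_var = [col for col in data.columns if col != "y"]
# Equivalent: data.drop(columns="y").columns.tolist()
print(input_var)
```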

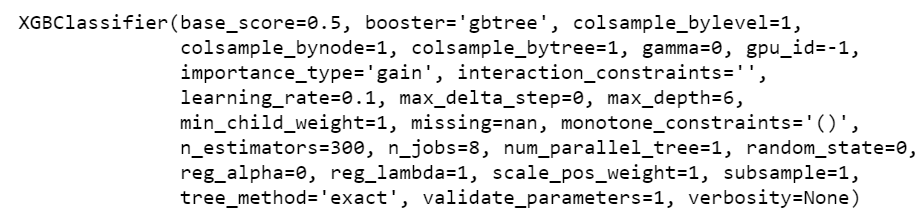

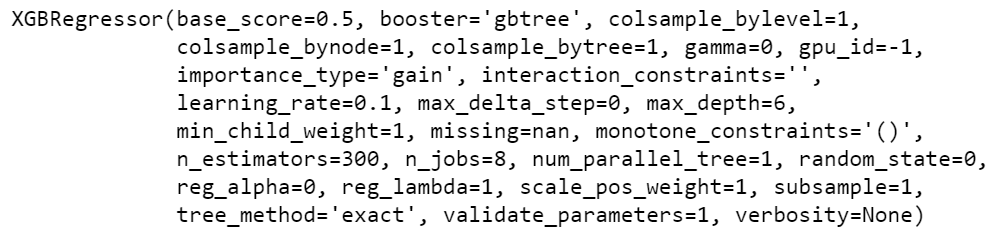

from xgboost import XGBRegressor

To predict a numeric value, import the XGBRegressor regression model.

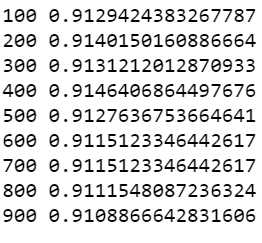

xgb = XGBRegressor(n_estimators=300, learning_rate=0.1)

xgb.fit(data[input_var], data['y'])

Train the XGBoost model.

Shap Value examples

import shap

Import the shap library.

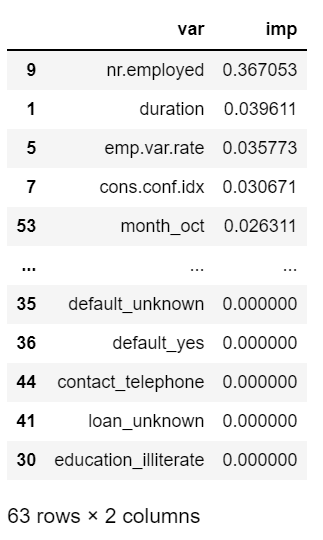

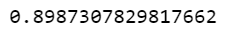

(1) Feature importance

explainer = shap.TreeExplainer(xgb)

shap_values = explainer.shap_values( data[input_var] )

Pass the trained model xgb to shap.TreeExplainer and store the resulting explainer object. Then pass the input columns of the dataset to explainer.shap_values.
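For a regression model like `XGBRegressor`, `explainer.shap_values(...)` returns a matrix with one row per data row and one column per input variable. Each row satisfies local accuracy: `explainer.expected_value` plus the row's SHAP values adds up to the model's output for that row. TreeExplainer computes these values from the trees themselves; the sketch below swaps in a linear model instead, because for a linear model with independent features the SHAP value has a simple closed form, `w_j * (x_j - mean(x_j))`. The data and weights are random and made up; the point is only the matrix shape and the additivity check.

```python
import numpy as np

# Swapped-in illustration (NOT what TreeExplainer does internally):
# for f(x) = w . x + b with independent features, feature j's SHAP value
# on row i is w_j * (x_ij - mean of column j).
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))        # 5 hypothetical rows, 3 features
w = np.array([2.0, -1.0, 0.5])     # made-up weights
b = 0.1

predictions = X @ w + b
expected_value = predictions.mean()        # base value = average model output
shap_values = w * (X - X.mean(axis=0))     # shape: (n_rows, n_features)

print(shap_values.shape)
# Local accuracy: base value + row sum of SHAP values == prediction
print(np.allclose(expected_value + shap_values.sum(axis=1), predictions))
```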

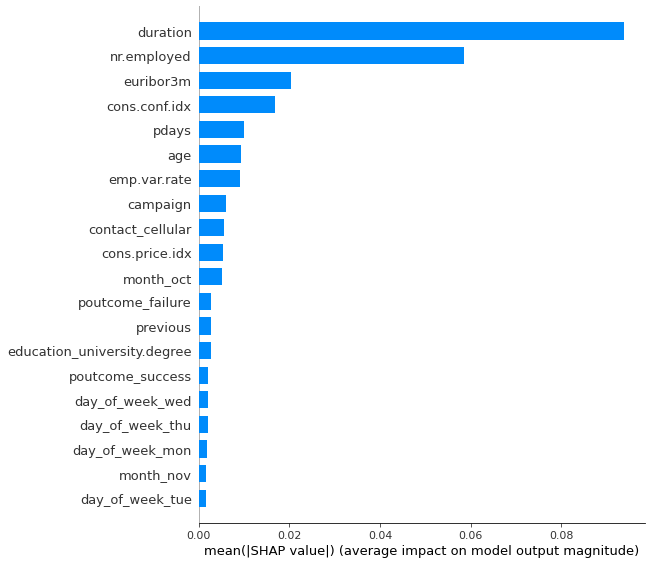

shap.summary_plot( shap_values , data[input_var] , plot_type="bar" )

Draw the feature importance chart with shap.summary_plot. The top feature is duration, which is the length of the phone call. In other words, call length has the largest influence on this model's predictions.
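With `plot_type="bar"`, the summary plot ranks features by the mean absolute SHAP value of each column. A minimal numpy sketch with a made-up SHAP matrix (feature names borrowed from the dataset) reproduces that ranking. Taking the absolute value matters: in this toy matrix, duration's positive and negative contributions cancel out under a plain mean, yet it is still the most influential feature.

```python
import numpy as np

# Hypothetical SHAP matrix: 4 rows x 3 features (values made up)
feature_names = ["duration", "nr.employed", "age"]
shap_values = np.array([
    [0.40, -0.10, 0.01],
    [-0.35, 0.05, -0.02],
    [0.20, -0.15, 0.03],
    [-0.25, 0.10, 0.00],
])

# Bar-type summary: mean absolute SHAP value per feature, largest first
mean_abs = np.abs(shap_values).mean(axis=0)
ranking = sorted(zip(feature_names, mean_abs), key=lambda item: item[1], reverse=True)
for name, score in ranking:
    print(f"{name:12s} {score:.4f}")

# A plain (signed) mean would hide duration's influence
print(shap_values.mean(axis=0))
```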

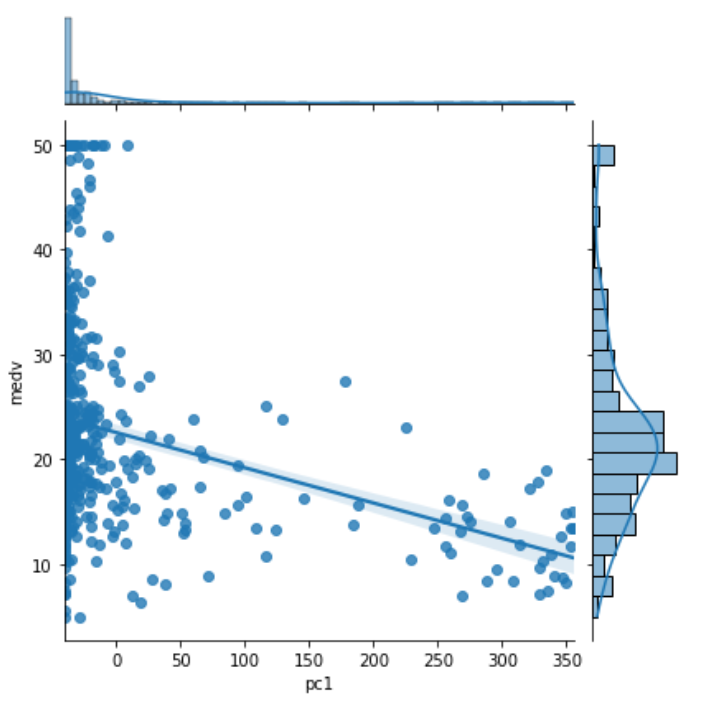

(2) dependence plot

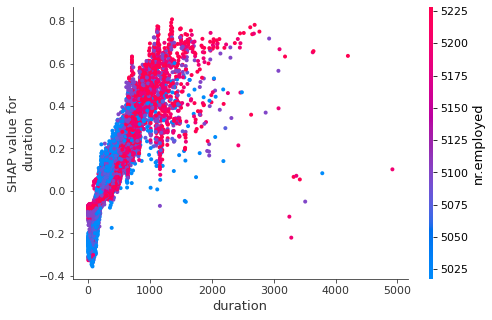

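: shows the relationship between a specific input variable and the target variable

: each point is one row (one data point), and its y value is that row's effect on the target (the SHAP value)

: lets you see in detail how the variable influenced the prediction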

shap.dependence_plot( 'duration' , shap_values , data[input_var] )

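In the duration plot, most duration values are below 3000. Within that range, once duration reaches roughly 50 or more, it has a positive influence, which we read as a higher likelihood of predicting 1 (many points have a SHAP value for duration greater than 0).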
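A reading "by eye" like this can be backed up numerically: bin the feature and count how often its SHAP value is positive in each bin. A minimal sketch with made-up points; the helper `positive_share_by_bin` is hypothetical. With the real data, one could pass `data['duration'].values` and the matching SHAP column, `shap_values[:, input_var.index('duration')]`; that wiring is an assumption, not something the post does.

```python
import numpy as np

# Hypothetical dependence-plot points for duration (all values made up):
# feature value of each row and that row's SHAP value for duration
duration = np.array([20, 35, 45, 60, 80, 150, 300, 900])
shap_duration = np.array([-0.30, -0.25, -0.10, 0.05, 0.12, 0.20, 0.35, 0.60])


def positive_share_by_bin(values, shap_vals, edges):
    """Share of points with SHAP > 0 in each bin [edges[k], edges[k+1]).
    Values outside [edges[0], edges[-1]) are ignored."""
    idx = np.digitize(values, edges) - 1
    shares = []
    for k in range(len(edges) - 1):
        mask = idx == k
        # An empty bin has nothing to summarize, so report NaN
        shares.append(float((shap_vals[mask] > 0).mean()) if mask.any() else float("nan"))
    return shares


edges = [0, 50, 200, 1000]
shares = positive_share_by_bin(duration, shap_duration, edges)
for lo, hi, share in zip(edges[:-1], edges[1:], shares):
    print(f"[{lo}, {hi}): {share:.0%} of points push toward 1")
```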

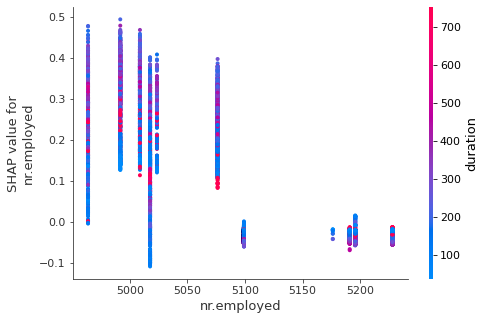

shap.dependence_plot( 'nr.employed' , shap_values , data[input_var] )

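Around 5020, the contribution turns negative, and above 5100 there are only negative contributions (→ more likely 0). Below that point, the contributions are positive, so the feature pushes the prediction up (→ more likely 1).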
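A cutoff like "only negative contributions beyond this value" can also be located programmatically. The hypothetical helper below walks the distinct feature values from largest to smallest and stops at the first value where any point has a non-negative SHAP value, so repeated feature values (common for nr.employed) are handled as a group. The points are made up.

```python
import numpy as np

# Hypothetical dependence-plot points for nr.employed (all values made up)
nr_employed = np.array([4963.6, 4991.6, 5008.7, 5017.5, 5023.5, 5076.2, 5099.1, 5191.0, 5228.1])
shap_nr = np.array([0.30, 0.25, 0.10, 0.02, -0.05, 0.01, -0.12, -0.20, -0.18])


def all_negative_from(values, shap_vals):
    """Smallest feature value v such that every point with value >= v has SHAP < 0.
    Returns None if some point at the largest value is not negative."""
    threshold = None
    for v in np.unique(values)[::-1]:   # distinct values, largest first
        if (shap_vals[values == v] >= 0).any():
            break
        threshold = v
    return threshold


print(all_negative_from(nr_employed, shap_nr))
```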

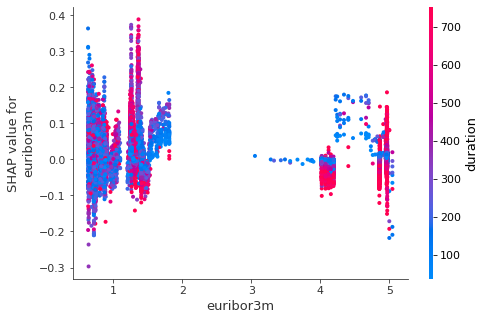

shap.dependence_plot( 'euribor3m' , shap_values , data[input_var] )

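Negative and positive values appear to be distributed fairly evenly. Looking for ranges with few negative and many positive values, we find roughly 1.3 or 1.4 up to 2, and 4 to 5. In those ranges, the prediction is more likely to be 1.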

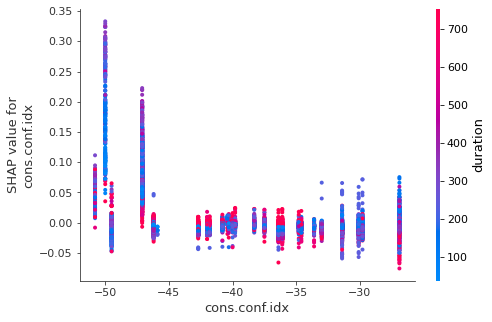

shap.dependence_plot( 'cons.conf.idx' , shap_values , data[input_var] )

Overall, the plot is dominated by negative values. At -45 or below, the prediction is more likely to be 1.

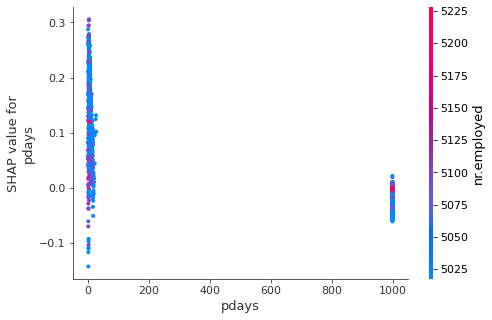

shap.dependence_plot( 'pdays' , shap_values , data[input_var] )

When pdays is 0, we can expect most data points to be more likely to be 1.

(3) force plot

: visualizes how the prediction for a specific row was produced

prediction = xgb.predict(data[input_var])

data['pred'] = prediction

shap.initjs()  # load the JavaScript needed to render force plots in a notebook

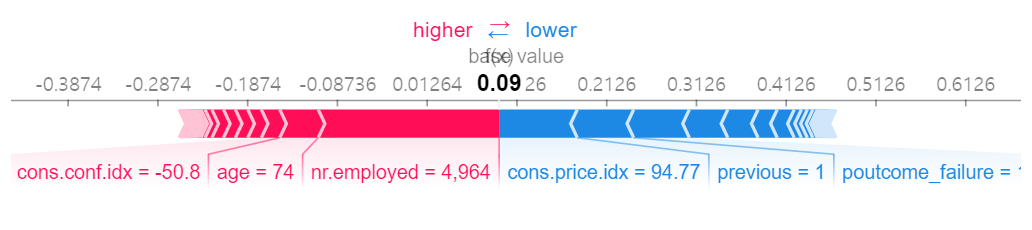

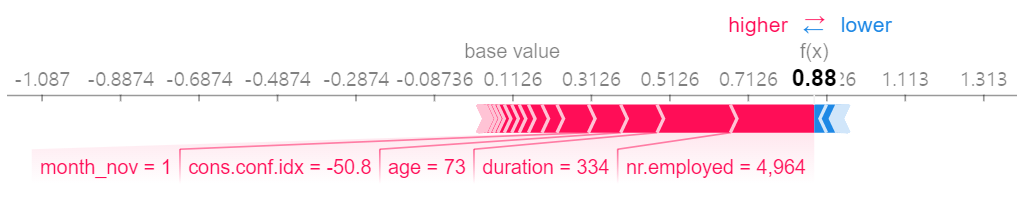

shap.force_plot( explainer.expected_value , shap_values[41187] , data[input_var].iloc[41187] )

Row 41187 was predicted as 0.09, and the features pushing the prediction down and those pushing it up are fairly evenly mixed.
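A force plot starts from the base value (`explainer.expected_value`) and adds each feature's SHAP value for that row; by default the shap library draws features that push the output up in red and those that push it down in blue. The same decomposition in plain Python, with a made-up base value and contributions for a single hypothetical row:

```python
# Hypothetical decomposition of one prediction (all numbers made up)
base_value = 0.20          # stands in for explainer.expected_value
contributions = {          # stands in for one row of shap_values
    "duration": 0.15,
    "nr.employed": -0.08,
    "euribor3m": 0.03,
    "pdays": -0.02,
    "month_may": -0.04,
}

prediction = base_value + sum(contributions.values())

# Features pushing the output up (largest first) and down (most negative first)
pushes_up = sorted(
    (item for item in contributions.items() if item[1] > 0), key=lambda item: -item[1]
)
pushes_down = sorted(
    (item for item in contributions.items() if item[1] < 0), key=lambda item: item[1]
)

print(f"base {base_value:.2f} -> prediction {prediction:.2f}")
print("up:", pushes_up)
print("down:", pushes_down)
```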

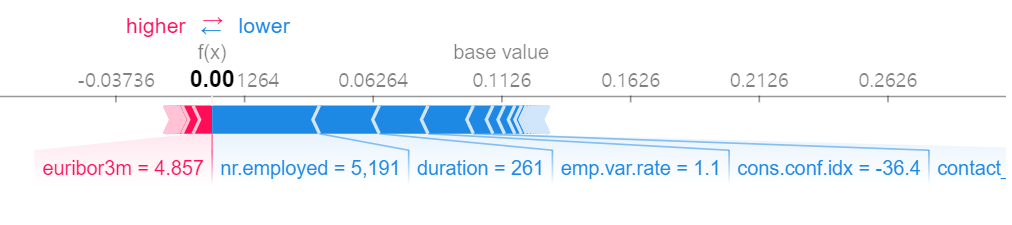

shap.force_plot( explainer.expected_value , shap_values[0] , data[input_var].iloc[0] )

Row 0, which was predicted very close to 0, shows almost every feature contributing negatively.

Row 41183 has much larger positive contributions, so its prediction comes out at 0.88. The true label is 1, so the model came close.

In this way, the shap library lets us examine in detail how each feature influenced the predictions.
